While “computers” – devices to assist humans in calculations – have been around for thousands of years, the modern computer age is less than a century old. The pace of technological development, along with the extent to which “smart electronics” are now woven into so many aspects of everyday life, makes this an important topic.

Some of the best-known early examples are the computers used during World War II to decrypt military ciphers, although these were highly specialized machines. It wasn’t until the development of “stored-program computers” and the adoption of the von Neumann architecture (remember that for your next pub quiz!) that computing began to move into more general use, albeit still confined to business and academia.

It’s hard to overstate the importance of this shift to stored programs. Calculating machines were already in common use in some areas: Herman Hollerith’s “electric counting machines”, for example, successfully assisted with the 1890 US Census. Hollerith went on to found the company that eventually became “International Business Machines” or, as it is more commonly known today, IBM.

HCI Professor Alan Dix picks up the story from the 1950s post-war era.

Video copyright info

Copyright holder: Tim Colegrove _ Appearance time: 3:02 - 3:09 _ Copyright license and terms: CC BY-SA 4.0, via Wikimedia Commons _ Link: https://commons.wikimedia.org/wiki/File:Trinity77.jpg

Copyright holder: Mk Illuminations _ Appearance time: 6:30 - 6:40 _ Link: https://www.youtube.com/watch?v=4DD5qLvHANs

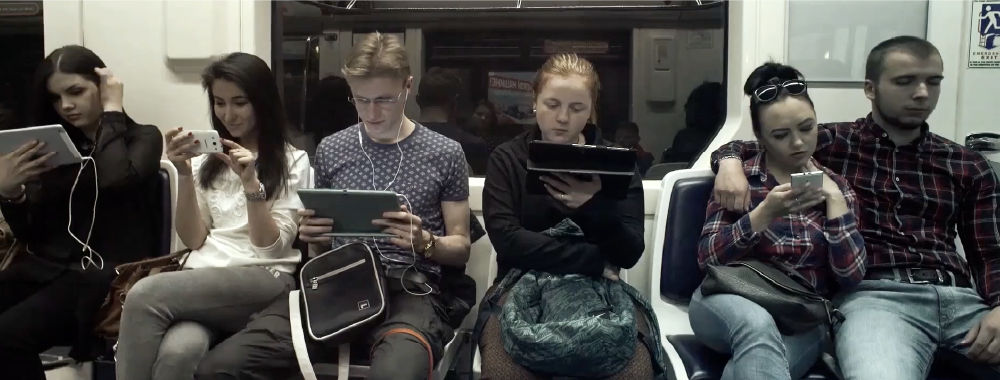

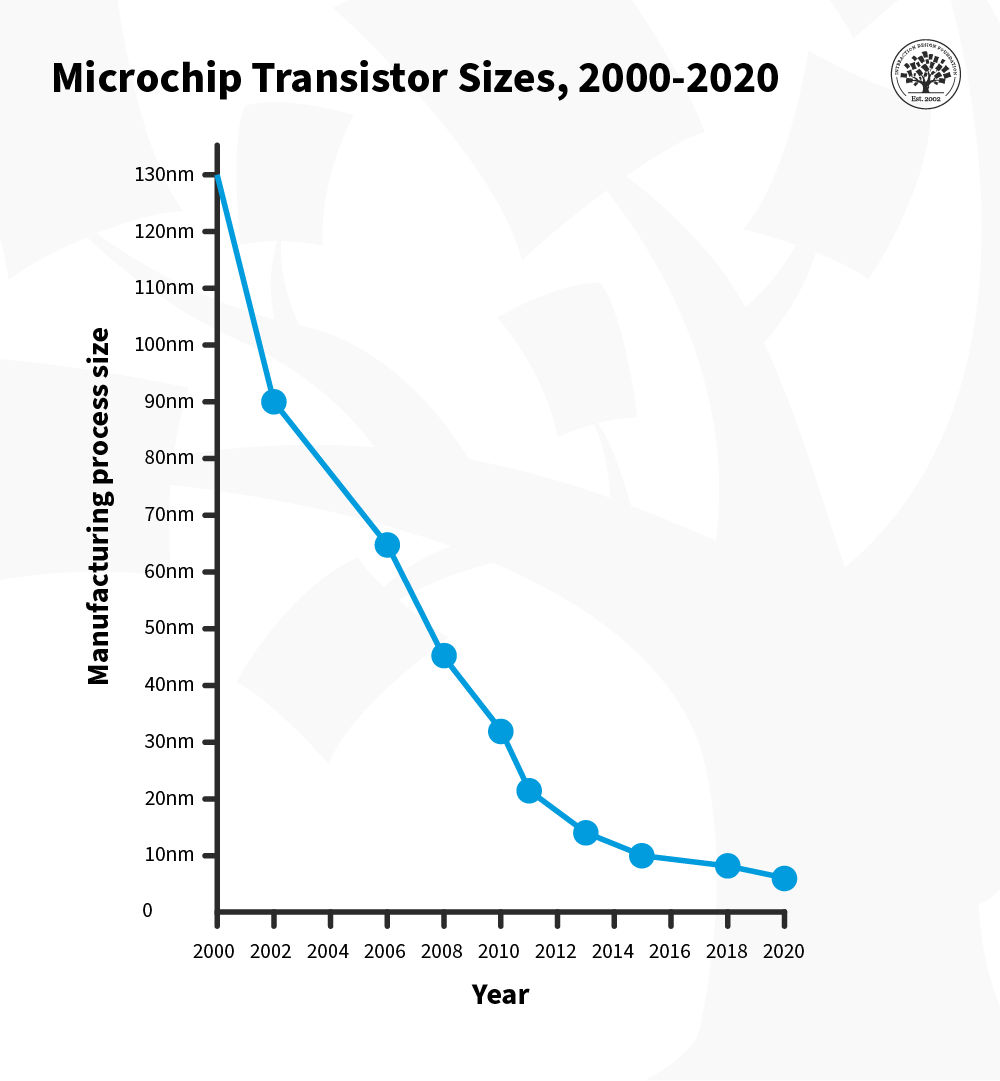

In the clip we saw computers shrink from numerous cabinets occupying large suites of rooms to something smaller than a postage stamp. Even since the beginning of the current millennium, the shrinking of computer technology has continued apace:

Microchip transistor sizes, 2000–2020.

©Eduardo Ferreira and Interaction Design Foundation, CC BY-SA 3.0

What does that mean for where we find computers and how we use them? Alan has some ideas…

One of the many interesting threads Alan mentions in the next clip is the changing role of gender in computing. This story is told well in Nathan Ensmenger’s The Computer Boys Take Over.

In the early days of computing, programming was seen as a largely clerical task and, not surprisingly for the time, a role filled mainly by women.

The Computer Boys Take Over (Nathan L. Ensmenger), The MIT Press, Fair Use

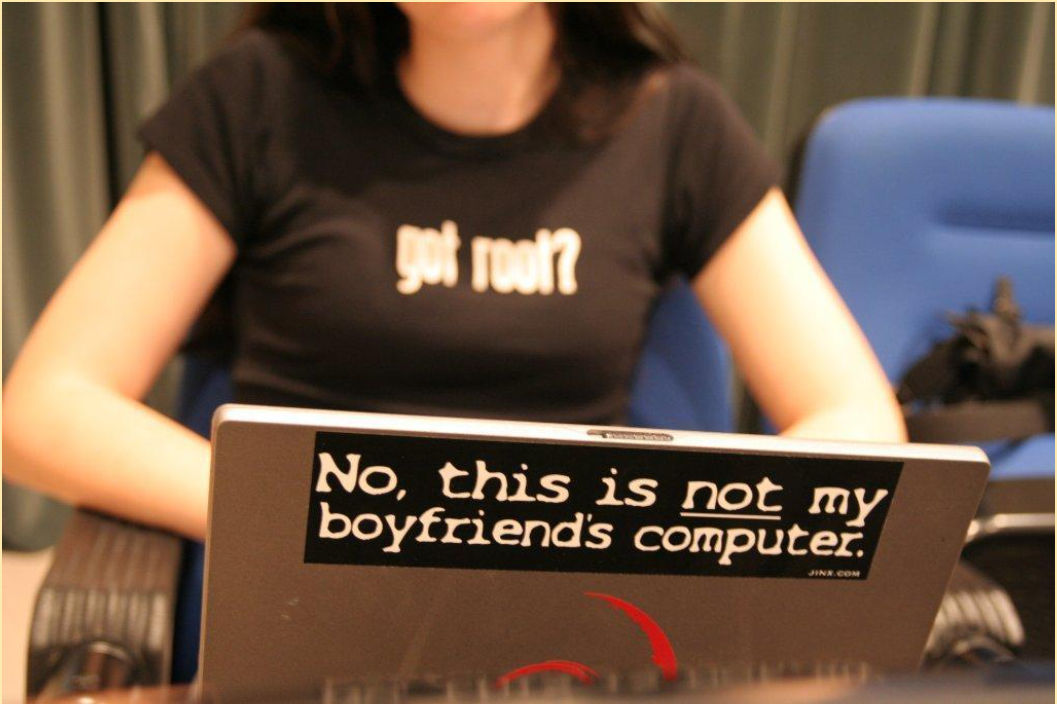

But as it became increasingly apparent that programming was skilled work that commanded better pay, it became a male domain. So much so that by the late 1980s and early 1990s, the vast majority of students on programming courses and computer science degree programs were men. Happily, this imbalance is narrowing over time, although significant disparities still exist.

“No, this is not my boyfriend’s computer.” Flickr, Public Domain, CC BY 2.0

Let Alan describe some of the other issues…

Video copyright info

Copyright: NASA Ames Research Center (NASA-ARC) _ Appearance time: 0:15 - 0:17 _ Copyright license and terms: Public domain, via Wikimedia Commons _ Link: https://commons.wikimedia.org/wiki/File:IBM_7090_computer.jpg

Copyright: NASA _ Appearance time: 0:17 - 0:20 _ Copyright license and terms: Public domain, via Wikimedia Commons _ Link: https://fr.m.wikipedia.org/wiki/Fichier:NASAComputerRoom7090.NARA.jpg

Copyright: Sailko _ Appearance time: 1:00 - 1:04 _ Copyright license and terms: CC BY 3.0, via Wikimedia Commons _ Link: https://commons.wikimedia.org/wiki/File:Apple_III,_personal_computer,_1980.jpg

Copyright: Suspiciouscelery _ Appearance time: 1:33 - 1:37 _ Copyright license and terms: CC BY-SA 4.0, via Wikimedia Commons _ Link: https://commons.wikimedia.org/wiki/File:Man_using_AutoCAD_(1987).jpg

Copyright: Paul Clarke _ Appearance time: 3:39 - 3:48 _ Copyright license and terms: CC0, via Wikimedia Commons _ Link: https://commons.wikimedia.org/wiki/File:Sir_Tim_Berners-Lee.jpg

Images

©Eduardo Ferreira and Interaction Design Foundation, CC BY-SA 3.0