User interfaces that you interact with through modalities such as touch, gestures or voice are often referred to as Natural User Interfaces (NUIs). We consider them interfaces that are so easy to use that they feel natural. However, what does it mean for an interface to be natural? And how do you design NUIs? Here, we explore what NUIs are, along with a set of guidelines for designing them, so that you can appreciate what makes an interface feel natural and incorporate that into your future work.

What is a Natural User Interface?

“Until now, we have always had to adapt to the limits of technology and conform the way we work with computers to a set of arbitrary conventions and procedures. With NUI, computing devices will adapt to our needs and preferences for the first time and humans will begin to use technology in whatever way is most comfortable and natural for us.”

—Bill Gates, co-founder of the multinational technology company Microsoft

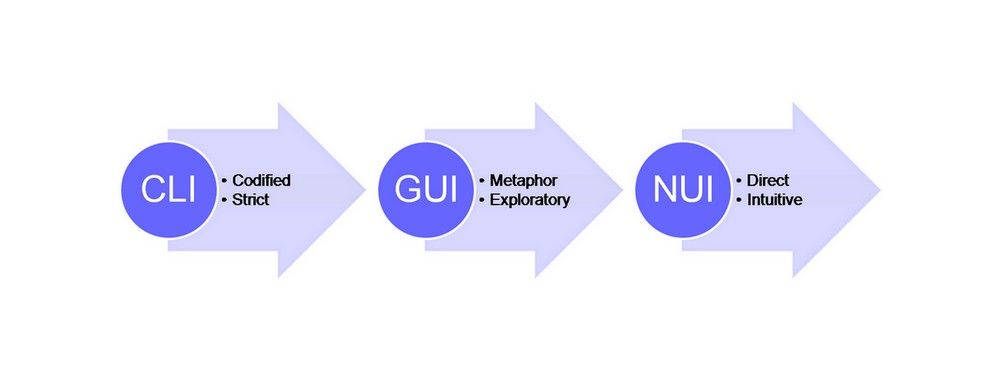

Defining NUIs is difficult, but when we think about user interfaces that are natural and easy to use, we often think of ones where the interaction is direct and consistent with our ‘natural’ behaviour. Examples that people often refer to as natural are multi-touch on the Apple iPad and mid-air body gestures used to control Microsoft’s Kinect sensor. NUIs have been called the next step in the evolution of user interfaces. Their advantage is that the interaction feels fun, easy and natural, because the user can draw on a broader range of basic skills than in traditional graphical user interface interaction, which mainly happens through a mouse and a keyboard.

Too often, people think that if they just use gesture interaction, for example, the user interface will be natural. Nevertheless, if we consider multi-touch gestures on the Apple iPad, we can see that the reality is somewhat more complex. Some iPad gestures come naturally and intuitively (e.g., swiping with one finger to the left or right), which is part of the ‘magic’ that took the iPad to such prominence. When you swipe with one finger, you scroll through pages or move content from one side of the screen to the other. The gesture itself corresponds to the action you are performing. It is also in keeping with how you would move, say, a plastic slide-control on an old central-heating system, which shows the power of the principle: things in the ‘digital world’ behave as they do in the ‘analogue world’.

Some gestures, though, require more learning—e.g., a four-finger swipe to the left or right. When you swipe to the left or right with four fingers, you will switch from one app to the next. The four-finger swipe is not intuitive—it doesn’t come naturally to us. Swiping with four fingers requires you as a user to learn it as a dedicated movement, because you need an understanding of the underlying system so as to understand the connection between the gesture and the action you are performing. If NUIs are not just created by using modalities that come naturally to the user, how can we define what they are?

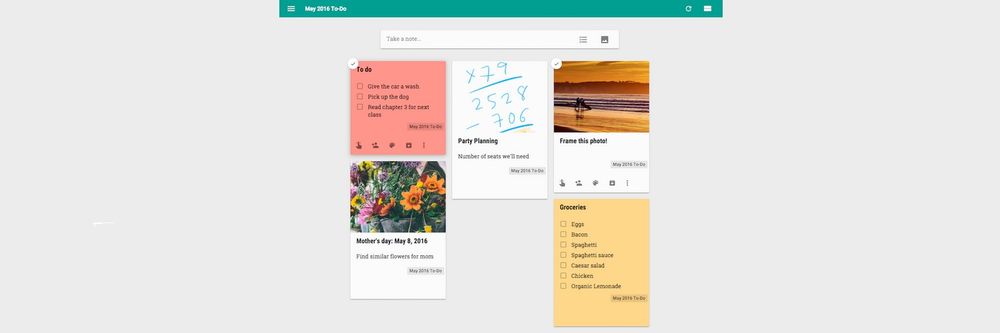

Author/Copyright holder: Intel Free Press. Copyright terms and licence: CC BY-SA 2.0

The touch user interface of the iPad is often referred to as an example of a Natural User Interface.

“Voice, gesture, touch does not necessarily Natural User Interface make.”

—Bill Buxton, Principal Researcher at Microsoft

Microsoft has done a lot of research into NUIs. We have based most of what you see here on their definition of and guidelines for them. Bill Buxton, a principal researcher at Microsoft, says that NUIs “exploit skills that we have acquired through a lifetime of living in the world, which minimizes the cognitive load and therefore minimizes the distraction”. He also states that NUIs should always be designed with the use context in mind. No user interface can be natural in all use contexts and to all users. While gestures, voice and touch are important components of many NUIs, they will only feel natural to a user if they match her skill level and her use context. For example, Bill Buxton mentions that voice user interfaces are probably the most natural user interface we can use while driving a car, but they do not work well in situations when we have many people around us—e.g., on a crowded aeroplane where other people can hear what you are saying. As an NUI must match the user’s skill level and use context, we’ll find—given the technology we have in the early 21st century—designing and implementing interfaces that feel natural to all users a very difficult task. So, rather than try to design NUIs that are natural for all users, we should focus any NUI we design on specific users and contexts.

NUIs should fit the individual user and her use context if they’re going to feel natural to her. That may sound straightforward, but we could say the same about many user interfaces; so what other attributes should an NUI have? In an excerpt from the book Natural User Interfaces in .NET, Joshua Blake lists four guidelines for designing NUIs:

Instant expertise

Progressive learning

Direct interaction

Cognitive load

Let’s now go through each guideline in turn to help you design great NUIs.

Instant Expertise

When you design an NUI, you should take advantage of the users’ existing skills. If users can apply skills they have from other areas of their lives, you’ll save them the trouble of learning something completely new. Once they realise what skills to use, the users can take advantage of their existing skills and expectations to interact with your NUI. There are two ways of doing this: reusing common human skills and reusing domain-specific skills.

Common human skills refer to the things that most people know how to do—e.g., speaking. The advantage of designing your NUI so that it uses a common human skill is that you do not have to think as much about different user groups. You can assume that most of your users have the skill simply because they are human.

Author/Copyright holder: Guillermo Fernandes. Copyright terms and licence: Public Domain Mark 1.0

The voice-controlled Amazon Echo Dot speaker is an example of a user interface that uses a common human skill. Its non-human appearance belies a very human ‘character’.

Domain-specific skills refer to skills of a specific user group. If you are designing a user interface for composing electronic music, you may assume that musicians know the different instruments, how to write notes, etc. That specific user group will find your user interface easier to use if it takes advantage of their existing knowledge rather than requiring them to learn something completely new.

When possible, though, you should try to reuse common human skills in your NUI designs, since they apply to a broad range of users. Even within a specific target group such as musicians, domain-specific skill sets differ. For example, some musicians can read notes and some cannot, relying instead on tablature or replicating works by ear. By building on common human skills, you expand your target audience (and widen your market) to accommodate pretty much anyone who has a desire to compose music.

Progressive Learning

Your NUI should allow novice users to learn—and progressively so—how to use the user interface. Here, you need to lay out a clear learning path for users, one that allows them to start with basic skills and move on to something more advanced step by step, in increments. Never overwhelm novice users with too many options. Instead, keep it simple—think ‘baby steps’. This way of learning imitates the way we learn many physical skills. For instance, when we learn how to ski, we work our way up gradually. We start with basic skills such as standing up on level ground without falling over; then we might try a moderate downhill slope before doing anything more daring. If we were to start in the middle of a steep slope, learning would be a painful experience, and many—assuming they were still in a condition to—would give up.

While a progressive learning path is important to novices, not standing in the way of expert users is every bit as vital. These veteran users need—and should be allowed—to use the skills they already have. If expert users are forced to go through a long learning path, they will become frustrated. Think of Olympic-level skiers, seething in indignation from having to remain on the baby slopes when the passion for grand slaloming drives them. Joshua Blake suggests that you accomplish this compromise by breaking complex tasks into a subset of basic tasks. If you cannot avoid more complex tasks, you should keep them to a strict limit as best you can. Likewise, make sure they don’t feature in the basic NUI that the novice user encounters. If we go back to the skiing example, the act (and art) of skiing is built up from a subset of basic tasks. These are the same whether you are novice or expert. However, obviously, the expert skier can combine the basic skills in a far different way from how the novice uses these. Another example of this is computer games, where novice players start off with simple moves and progress to more complex combos that become available to them as they build up experience and can therefore fight more difficult enemies. Dropping them in at the deep end on the very first level would have players shaking their heads in disbelief—incidentally, this is why games designers typically offer various difficulty settings in the menu, but even the hardest ones tend to afford players some leeway so they can get to the second level, at least. Building up these basic tasks for the users of your designs is no different. Always apply this principle to any NUI you approach.
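The idea of building complex tasks out of a small set of basic ones can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the action names (`select`, `move`, `rotate`, `relocate`, `reorient_and_relocate`) and both helper functions are hypothetical and do not come from Blake's book or from any real toolkit. The sketch surfaces advanced actions to novices gradually, while never preventing an expert from using them straight away.

```python
# Hypothetical action names, chosen only for illustration.
BASIC_ACTIONS = ["select", "move", "rotate"]

# Each advanced action is defined purely as a sequence of basic ones.
COMPOSITE_ACTIONS = {
    "relocate": ["select", "move"],
    "reorient_and_relocate": ["select", "rotate", "move"],
}


def expand(action):
    """Return the basic steps that make up an action."""
    if action in BASIC_ACTIONS:
        return [action]
    if action in COMPOSITE_ACTIONS:
        return list(COMPOSITE_ACTIONS[action])
    raise ValueError(f"unknown action: {action}")


def suggested_actions(practised):
    """Actions the interface surfaces to this user.

    Basic actions are always shown. A composite is shown only once the
    user has practised every basic step it is built from. Nothing here
    blocks an expert from invoking a composite directly via expand().
    """
    unlocked = [
        name
        for name, steps in COMPOSITE_ACTIONS.items()
        if set(steps) <= set(practised)
    ]
    return BASIC_ACTIONS + unlocked


print(suggested_actions(set()))               # novice: basics only
print(suggested_actions({"select", "move"}))  # "relocate" now appears
print(expand("reorient_and_relocate"))        # an expert can use it at once
```

The design choice worth noting is that the gating applies to what is suggested, not to what is permitted, which mirrors the point that a learning path should not stand in the way of expert users.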

The Reactable is an electronic musical instrument with a tangible user interface that takes advantage of a common human understanding of physical objects. It works by placing and moving physical objects (tangibles) on the interactive surface. Both novices and experts can use it.

Direct Interaction

An NUI should imitate the user’s interaction with the physical world by having a direct correlation between user action and NUI reaction. Both the Apple iPad and the Microsoft Kinect are good examples of NUIs where the relation between our actions and what happens on the screen feels direct. Joshua Blake divides direct interaction into three parts: directness, high-frequency interaction and contextual interaction.

Directness means that the user is physically close to (touching) the NUI he is interacting with, that NUI actions happen at the same time as user actions or that the motions of elements on the NUI follow the motions of the user. Some NUIs, such as the Apple iPad, contain all forms of directness, while others, such as the motion-sensing input device Microsoft Kinect, contain one or two directness elements.

Author/Copyright holder: Waag Society. Copyright terms and licence: CC BY-NC-SA 2.0

Microsoft’s Kinect sensor detects the users’ motion, allowing them to interact with content on the screen via movements. The interaction is not close to the screen, but it responds in real time and follows the motions of the user.

High-frequency interaction means that there is a constant flow of action and reaction between the user and the NUI. This imitates activities in the physical environment where we constantly receive feedback as to our balance, speed, temperature, etc. One way to achieve high-frequency interaction in an NUI is to follow the guidelines for creating directness. If the user can constantly see the direct consequence of her actions, she is also receiving constant feedback. Zooming in and out on Google Maps on a touch screen is a good example of a high-frequency interaction. The movements of the map closely follow the movements of the user’s fingers.
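One common way to make content follow the fingers during a pinch is to recompute the zoom factor on every touch-move event as the ratio between the current and the initial distance between the two fingers. The Python sketch below illustrates that general technique only; it is not how Google Maps is implemented, and the function names and the `(x, y)` tuple format for touch points are assumptions made for this example.

```python
import math


def distance(p, q):
    """Euclidean distance between two (x, y) touch points."""
    return math.hypot(q[0] - p[0], q[1] - p[1])


def pinch_scale(start_touches, current_touches):
    """Zoom factor implied by a two-finger pinch.

    The factor is the current finger spread divided by the spread at the
    start of the gesture, so the content scales in step with the fingers.
    """
    start = distance(*start_touches)
    if start == 0:
        return 1.0  # both fingers started on the same point; avoid dividing by zero
    return distance(*current_touches) / start


# Every touch-move event produces a fresh factor, so feedback is continuous.
gesture_start = ((100, 100), (200, 100))
for frame in [((90, 100), (210, 100)), ((50, 100), (250, 100))]:
    print(round(pinch_scale(gesture_start, frame), 2))
```

Because the factor is derived from the finger positions in each frame rather than from a fixed step, the user sees the consequence of every small movement, which is the constant flow of action and reaction described above.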

To avoid overwhelming the user in high-frequency interactions, it is important that your NUI also uses contextual interactions. Rather than showing all options at once, the NUI should primarily show information that is relevant to the user’s current interaction. Google Maps, for instance, does this by only showing the scale of the map when the user is zooming in or out. The scale disappears shortly after the user has stopped zooming. The user doesn’t need much time to register the point that he’s moved from, say, a 1-cm:50-mile to a 1-cm:5-mile representation.

Author/Copyright holder: Google Incorporated. Copyright terms and licence: Fair Use.

On a touch screen device, Google Maps only shows the scale of the map when the user is zooming. The scale disappears shortly after the user has stopped zooming.
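The behaviour of a scale that appears while zooming and disappears shortly afterwards can be modelled with a timestamp and a short linger period. The following Python sketch is an assumption-laden illustration, not Google's implementation: the class name `ScaleIndicator`, its methods and the 1.5-second delay are all hypothetical.

```python
class ScaleIndicator:
    """Shows the map scale only while zooming and for a moment afterwards."""

    def __init__(self, linger_seconds=1.5):
        # Assumed delay for illustration; not a value taken from Google Maps.
        self.linger_seconds = linger_seconds
        self.last_zoom_time = None

    def on_zoom(self, now):
        """Record that a zoom event happened at time `now` (in seconds)."""
        self.last_zoom_time = now

    def is_visible(self, now):
        """True while the user is zooming or has only just stopped."""
        if self.last_zoom_time is None:
            return False
        return (now - self.last_zoom_time) <= self.linger_seconds


indicator = ScaleIndicator()
print(indicator.is_visible(0.0))  # hidden before any zooming
indicator.on_zoom(1.0)
print(indicator.is_visible(1.2))  # shown just after a zoom event
print(indicator.is_visible(3.0))  # hidden again once the linger period has passed
```

Passing the current time in as an argument, rather than reading a clock inside the class, keeps the sketch easy to test and makes the contextual rule explicit: visibility depends only on how recently the user was zooming.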

Cognitive Load

If users find interaction with an interface difficult, their mental effort, or cognitive load, is high. We don’t want users to have to keep thinking about how to manipulate the interface; we want them to focus on achieving a task. Hence, we want to keep the cognitive load to a minimum. To do so, design your NUI so that the user primarily applies basic knowledge and simple skills during the interaction. This will ensure that the interface is easy to use and learn. A good example of basic knowledge is our understanding of objects in the physical world. We understand how physical objects behave from infancy, and when user interfaces take advantage of this knowledge by having graphical user interface objects that behave in the same manner, we know exactly what to expect from those interfaces. If your specific user group already has complex skills that they could use to interact with your NUI, you should still prioritize teaching them simple skills rather than having them rely on their existing complex skills. In this sense, the Cognitive Load guideline is in tension with the Instant Expertise guideline; nevertheless, basic skills require less effort in the long run and allow you to target a broader user group.

The Take Away

An NUI is a user interface that feels natural to use because it fits the skills and context of the user. Below are the four guidelines for designing NUIs.

An NUI should take advantage of the users’ existing skills and knowledge.

An NUI should have a clear learning path and allow both novice and expert users to interact in a natural way.

Interaction with an NUI should be direct and fit the user’s context.

Whenever possible, you should prioritize taking advantage of the user’s basic skills.

Remember, users will decide whether your NUI succeeds very shortly after they first access your design. So, keep their identity, needs and context in mind every step of the way. Just as importantly, stay mindful of how ‘clever’ an aspect of your NUI actually is: great design is about satisfying needs, not outsmarting users.

References & Where to Learn More

Joshua Blake, Natural User Interfaces in .NET, 2011

You can read an excerpt from the book and more discussion of how to design NUIs here.

To learn more about NUIs, you can also read: Daniel Wigdor and Dennis Wixon, Brave NUI World: Designing Natural User Interfaces for Touch and Gesture, 2011

Hero Image: Author/Copyright holder: August de los Reyes. Copyright terms and licence: Public Domain.