Unmoderated Remote Usability Testing (URUT) is a technique designed to help you overcome the downsides of moderated usability testing. Moderated usability testing is undeniably useful, but it has several drawbacks: it’s time-consuming, recruiting participants takes a lot of effort, the costs (due to all that time input) are usually too high to test large numbers of users, and users are observed outside their usual environment (which may change their behaviour).

About URUT

URUT is designed for usability testing of products or interfaces. That means it measures how satisfied (or dissatisfied) a user is with the interface and operability of the product.

The idea is that participants will work through a task (or tasks) in their usual environment without the need for a moderator to be present. These tasks are presented to the user via an online platform.

Data is captured from URUT in one of two ways. The first is via click-stream; in this case URUT often resembles a survey and captures quantitative data for researchers. The second is via video, which provides more qualitative insight into user behavior.

Click-stream offers fast data capture and easy analysis, while video offers deeper insight into user behavior. It is possible to combine the two approaches, but the tests need careful design to benefit from doing so.

Author/Copyright holder: Frits Ahlefeldt-Laurvig. Copyright terms and licence: CC BY-NC-ND 2.0

It’s definitely easier to involve a user in Unmoderated Remote Usability Testing than it is to get them involved in lab moderated testing.

When Should I Use URUT Rather than Moderated Testing?

A lot depends on the needs of your usability tests but some of the more common scenarios include:

- Competitor benchmarking. URUT makes it easy to examine two different products and capture enough data to make informed decisions based on the differences discovered.

- Budget constraints. URUT is cheaper than moderated testing, so if you don’t have the budget for the latter, the former may be the better approach.

- Tight deadlines. If you need the data in a hurry, URUT is much faster to set up than moderated testing.

- Geographical constraints. If you want to test a global (or widely-dispersed) audience then URUT is likely to be easier and cheaper to implement (by far) than moderated testing.

- In-the-wild data. If the customer’s environment has a large influence on how the product is used, then moderated testing is probably not the way to go.

- Large sample sizes. Without the need for moderation, automated remote testing can be scaled to sample sizes large enough to deliver statistically significant results from the user base. It’s still worth remembering that Steve Krug, the usability expert, says: “Testing one user is 100 percent better than testing none.”
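
To make the sample-size point concrete, here is a minimal sketch (not part of the original article) of how the uncertainty around a task completion rate narrows as more participants are tested. It uses the Wilson score interval at roughly a 95% confidence level; the function name `wilson_interval` and the 80% completion rate are illustrative assumptions.

```python
import math


def wilson_interval(successes, n, z=1.96):
    """Return the (low, high) Wilson score interval for a proportion.

    z=1.96 corresponds to roughly a 95% confidence level.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half_width, centre + half_width


# Hypothetical: the same 80% completion rate observed at different sample sizes.
for n in (5, 20, 100, 500):
    low, high = wilson_interval(round(0.8 * n), n)
    print(f"n={n:>3}: completion rate plausibly between {low:.2f} and {high:.2f}")
```

The interval is wide with a handful of participants and tightens as the sample grows, which is why unmoderated testing at scale suits questions that need a confident number rather than a list of observed problems.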

Author/Copyright holder: Cosmocatalano. Copyright terms and licence: CC0 1.0

When you’re trying to save money, don’t forget the quality triangle for good project results.

Considerations for Running URUT

There are many considerations when developing and running URUT for your products. Some will be specific to your own needs, but the following apply generally:

Prior to URUT

As with all forms of research, you need to be clear about your objectives and the questions you expect the research to answer. Working with stakeholders can help you develop this understanding.

Participants

You’ll also need to work out how to recruit people to take part in the study. Your options include:

- E-mail. If you have a list of customer e-mail addresses you can e-mail them to ask them to take part.

- Pop-ups. If your customers are on your website, you can use a pop-up to redirect them to the study. The advantage of this approach is that you’re likely to get a very representative sample; the disadvantage is that it may distract them from shopping or other, more valuable business activity.

- Accessing pre-built testing databases. You can pay to access other people’s testing databases if you don’t have your own. These resources can often be targeted quite specifically to ensure representative audiences.

- Social media. There’s nothing wrong with asking for volunteers on your social media channels either (if they’re active enough).

Author/Copyright holder: Jason Howie. Copyright terms and licence: CC BY 2.0

Assuming you have a large following on social media, it can be a great way to get participants involved in your URUT.

You may also need to offer participants an incentive to take part. The less involved a participant is with the product or service, the larger the incentive it usually takes to get them on board.

Task Design

You’ll need to be very clear about the tasks that participants are expected to complete. You must offer enough detail that they can complete them without assistance. You may also need to supply dummy data, such as credit card details for a mock purchase.

Keep instructions as simple and minimal as possible, and include a call to action wherever one is needed.

Survey Questions

You can also include survey questions in a URUT exercise:

- Closed questions following each task can help measure how participants are feeling about the usability of that task.

- Questions can be used at the end of the exercise to get a more general impression of the exercise.

- Questions can also be used to collect demographic data.

- Questions can also be used to test comprehension of content.

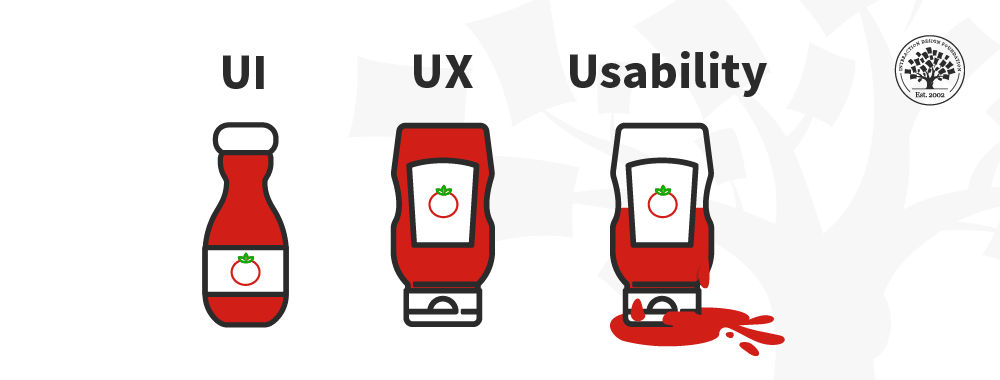

Author/Copyright holder: JayWalsh. Copyright terms and licence: CC BY-SA 3.0

Survey questions can provide additional insight into emotions or even unrelated topic areas during URUT. Wikipedia uses surveys, as seen here, to gauge how its editors feel about their work.

Delivery of the URUT Tool

Make sure that the tool is easy to access and to get to grips with without any support. You don’t want to introduce barriers to entry for participants.

Pilots

It can be very useful to test a URUT with a small number of participants and then refine it before launching it with a large audience.

Support

You should offer some telephone or e-mail support to participants, both during the URUT exercise and afterwards in case they have any questions once it has been completed.

Analysis

Once the URUT is complete, you need to analyze the data collected. You can apply whichever qualitative or quantitative techniques you need to get a clear understanding of the results. It can be useful to define some headline metrics and measure these first to give quick results back to stakeholders.
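
As an illustration of what headline metrics might look like, the following minimal sketch summarises one task by completion rate, median time on task and mean post-task rating. The record layout and field names are assumptions made for this example, not the export format of any particular URUT tool, and the data is invented.

```python
from statistics import median

# Hypothetical per-participant results for a single task.
# Field names are illustrative assumptions, not a real tool's schema.
results = [
    {"participant": "p1", "completed": True, "seconds": 42.0, "rating": 4},
    {"participant": "p2", "completed": True, "seconds": 55.0, "rating": 5},
    {"participant": "p3", "completed": False, "seconds": 120.0, "rating": 2},
    {"participant": "p4", "completed": True, "seconds": 61.0, "rating": 3},
]


def headline_metrics(rows):
    """Summarise one task: completion rate, median time, mean rating."""
    if not rows:
        raise ValueError("no results to summarise")
    completed = [r for r in rows if r["completed"]]
    # Time on task is only meaningful for participants who finished.
    times = [r["seconds"] for r in completed]
    return {
        "participants": len(rows),
        "completion_rate": len(completed) / len(rows),
        "median_seconds": median(times) if times else None,
        "mean_rating": sum(r["rating"] for r in rows) / len(rows),
    }


print(headline_metrics(results))
```

The median is used for time on task because a few very slow participants can skew a mean; whether that choice suits your study depends on your objectives.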

The Take Away

URUT (Unmoderated Remote Usability Testing) can be a useful replacement for moderated usability testing in certain circumstances. It should be used with careful thought, and with clear objectives set before the study begins. It’s an excellent addition to a usability tester’s or UX designer’s toolkit.

Resources

Course: Conducting Usability Testing - https://www.interaction-design.org/courses/conducting-usability-testing

The Nielsen Norman Group offers some good tips on choosing URUT tools - https://www.nngroup.com/articles/unmoderated-user-testing-tools/

UX Matters examines the case for and against URUT here - http://www.uxmatters.com/mt/archives/2010/01/unmoderated-remote-usability-testing-good-or-evil.php

Hero Image: Author/Copyright holder: leisa reichelt. Copyright terms and licence: CC BY-SA 2.0