How to Supercharge Your Design Workflow with AI

Generative AI (Artificial Intelligence) refers to a class of algorithms and models designed to autonomously produce content, such as text, images, music, or other forms of data, by learning patterns and structures from vast datasets.

Unlike traditional AI systems that follow predefined rules, generative AI has the ability to create entirely new and often highly realistic outputs, generally in response to AI prompts or with prompt engineering. It operates at the intersection of creativity and artificial intelligence.

In this video, AI product designer Ioana Teleanu dives into generative AI and how to use it as a designer.

We're in the middle of an AI Revolution, and I think I can fairly compare it to the early days of the internet or the beginning of the mobile phone industry. The world is changing fundamentally and irreversibly. And the way we work and live is tremendously impacted, whether we want it or not. Why now, when AI has been around for so long? Well, it probably has to do with the impressive popularity and spectacular adoption rates of recently launched AI tools. What this adoption story tells us is that people are extremely curious, interested and open to this new world of tools and capabilities; interest that's most probably stemming from a mix of enthusiasm, fear, reluctance and excitement. And most of these tools are based on *generative AI*.

So, what is generative AI? We know that machine learning is a subset of AI, deep learning is a subset of machine learning, and – you guessed it – generative AI is a subset of deep learning. To define it, putting it simply, generative AI is a type of artificial intelligence that creates new content based on what it has learned from existing content. Its main applications are in generating text, images or other media in response to prompts. How it works is that the model gets trained on data by applying machine learning techniques and is then able to create something entirely new, but with similar characteristics.
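As a toy illustration of "learn from existing content, then create something new with similar characteristics", the sketch below trains a tiny word-pair (bigram) model and samples from it. It is a deliberately simplified stand-in, not how modern generative tools are built; the function names and the sample corpus are made up for this example.

```python
import random
from collections import defaultdict

def train_bigram_model(corpus):
    """Record, for each word, the words that follow it in the corpus."""
    followers = defaultdict(list)
    words = corpus.split()
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word].append(next_word)
    return dict(followers)

def generate(model, start_word, max_words, seed=0):
    """Produce new text by repeatedly sampling a learned follower."""
    rng = random.Random(seed)
    output = [start_word]
    # Stop at the length limit or when the last word has no known follower.
    while len(output) < max_words and output[-1] in model:
        output.append(rng.choice(model[output[-1]]))
    return " ".join(output)

# Hypothetical training text, for illustration only.
corpus = "the user taps the button and the user sees the result"
model = train_bigram_model(corpus)
print(generate(model, "the", max_words=8))
```

Every word pair in the output was seen during training, yet the sentence as a whole may never have appeared in the corpus, which is the "new, but with similar characteristics" idea in miniature.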

The main applications of generative AI are *language models* and *image models*. Language models learn about patterns in language through training data and then, given some text, they predict what comes next. Given text as input, these tools can produce more text (new text, translations, summarizations, question answering, grammar correction); images, video, audio and speech; or decisions and actions, such as carrying out tasks or playing games.

*Image models* produce new images using techniques such as *diffusion*, which works by destroying training data by adding noise, and then learning to recover the data by reversing this noising process. Then, given a prompt, they transform random noise into images. Given images as input, generative AI tools can return text (image captioning, image search, visual question answering), images (image completion, super resolution) or video (such as animations).
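The "destroying training data by adding noise" half of diffusion can be sketched in a few lines. This is a minimal illustration assuming the commonly used Gaussian noising formula; the `signal_kept` parameter name and the four-value "image" are invented for the example, and the learned denoising model that reverses the process is not shown.

```python
import math
import random

def add_noise(pixels, signal_kept, rng):
    """Blend clean data with Gaussian noise.

    signal_kept is the fraction of the original signal's variance that
    survives (1.0 = untouched, 0.0 = pure noise).
    """
    signal_scale = math.sqrt(signal_kept)
    noise_scale = math.sqrt(1.0 - signal_kept)
    return [signal_scale * p + noise_scale * rng.gauss(0.0, 1.0) for p in pixels]

rng = random.Random(42)
clean = [1.0, -1.0, 1.0, -1.0]  # stand-in for normalized pixel values
for signal_kept in (1.0, 0.5, 0.0):
    noisy = add_noise(clean, signal_kept, rng)
    print(signal_kept, [round(v, 2) for v in noisy])
```

At `signal_kept=0.0` nothing of the original remains. Generation runs the other way: a trained model starts from such pure noise and removes it step by step, guided by the prompt.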

The generative AI application landscape is already pretty vast, and we'll see more use cases and scenarios that these tools will address as technology and our understanding of it advance. Narrowing our scope to the design industry, some of the applications that help us most right now are: *image generation* – visuals, mockups, icons, product illustrations, photography; *text generation* – UX copy, product descriptions, emails, documentation; *data augmentation* – in cases where we have scarce research data, AI can help create synthetic data to supplement the real data (not ideal; real data is the best, though); *chatbots* for customer experience, and so on.

The most prominent tools in generative AI are ChatGPT, Bard, Microsoft's Copilot, Midjourney, DALL-E and Stable Diffusion, to name a few. The landscape of tools is ever-changing, with new tools emerging every day and others being sunset after a short-lived life. The speed at which the market is transforming makes it hard to keep up with all the tool opportunities we can leverage in our work, but some of them are stable enough to be a safe bet for sticking around for a while: Bard, GPT and Copilot are probably here to stay. And I'm excited to see how this prediction ages.

Generative AI works through a process that involves learning patterns, structures, and relationships from extensive datasets. This process allows the AI model to generate new and often highly realistic outputs. Here's a simplified breakdown of how generative AI works:

Generative AI relies on neural networks, which are computational models inspired by the human brain. These networks consist of interconnected nodes or artificial neurons that process information. Learn more about neural networks in the following video.

Neural networks are a type of artificial intelligence that can learn from data and perform various tasks, such as recognizing faces, translating languages, playing games and more. Neural networks are inspired by the structure and function of the human brain, which consists of billions of interconnected cells called neurons. They are made up of layers of artificial neurons that process and transmit information between each other. Neural networks can be trained using different algorithms and architectures depending on the task and the data.

Neural networks have many applications in various domains, such as image recognition, speech recognition, machine translation, natural language processing, medical diagnosis, financial forecasting, quality control, gaming and more. For example, Facebook uses a deep learning facial recognition system called DeepFace. Google Translate uses a neural machine translation system with an encoder-decoder architecture. Google DeepMind developed AlphaGo, a deep reinforcement learning system that defeated the world champion of Go.

Neural networks are powerful tools for artificial intelligence because they can adapt to new data and situations, generalize from previous examples, and discover hidden patterns and features in the data. However, they face challenges and limitations such as explainability, robustness, scalability and ethics. Some possible directions for the future of neural networks include neuroevolution, symbolic AI integration, and generative models. Neuroevolution is a form of artificial intelligence that uses evolutionary algorithms to generate artificial neural networks, parameters and rules. It is often applied in artificial life and general game playing.

Symbolic AI integration is a type of artificial intelligence that combines neural and symbolic AI architectures to address the weaknesses of each, providing a robust AI capable of reasoning, learning, and cognitive modeling. These can be useful in autonomous cars and medical diagnosis systems. Generative models are a type of machine learning model that generates new data that is similar to a given dataset.

They can capture the underlying patterns or distributions from the data and provide realistic fake data that shares similar characteristics with the original data.

Generative Models

The core of generative AI lies in generative models, with two prominent types being Generative Adversarial Networks (GANs) and Variational Autoencoders (VAEs).

GANs, introduced in 2014, have a generator and a discriminator working against each other to make content look real. They improve together until the generator fools the discriminator. GANs are great for realistic and varied outputs in art and design.
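The tug-of-war between generator and discriminator can be made concrete by looking at what each side tries to minimize. The sketch below assumes the discriminator outputs a score between 0 and 1 (its estimated probability that a sample is real) and uses a common cross-entropy formulation; the function names are illustrative, and the neural networks and training loop themselves are omitted.

```python
import math

def discriminator_loss(scores_on_real, scores_on_fake):
    """Low when real samples score near 1 and generated samples near 0."""
    real_term = [-math.log(s) for s in scores_on_real]
    fake_term = [-math.log(1.0 - s) for s in scores_on_fake]
    return (sum(real_term) + sum(fake_term)) / (len(real_term) + len(fake_term))

def generator_loss(scores_on_fake):
    """Low when the discriminator scores generated samples near 1."""
    return sum(-math.log(s) for s in scores_on_fake) / len(scores_on_fake)

# Hypothetical discriminator scores at two points in training.
early_fakes = [0.1, 0.2]   # fakes are easy to spot
later_fakes = [0.5, 0.6]   # fakes are harder to tell apart from real data

print(generator_loss(early_fakes) > generator_loss(later_fakes))
print(discriminator_loss([0.9, 0.8], early_fakes) < discriminator_loss([0.9, 0.8], later_fakes))
```

The same change in scores lowers one loss and raises the other, which is why the two networks are described as working against each other.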

© Interaction Design Foundation, CC BY-SA 4.0

On the other hand, VAEs, introduced in 2013, have an encoder and a decoder. The encoder maps the input to a distribution in a latent space, and sampling from that distribution adds diversity. Then, the decoder reconstructs the input from the sampled point. VAEs are good for varied and realistic outputs for images and creative tasks. Both GANs and VAEs are essential in AI to create diverse and lifelike content.
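Where the diversity in a VAE comes from can be shown with the sampling step alone. In this minimal sketch the encoder's output is hard-coded as a hypothetical mean and log-variance per latent dimension; the encoder and decoder networks are not implemented, and all names and numbers are assumptions made for illustration.

```python
import math
import random

def sample_latent(mean, log_variance, rng):
    """Draw z = mean + std * noise for each latent dimension."""
    return [
        m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
        for m, lv in zip(mean, log_variance)
    ]

# Hypothetical encoder output for one input: a 2-D latent distribution.
mean = [0.3, -1.2]
log_variance = [-2.0, -2.0]

rng = random.Random(0)
variations = [sample_latent(mean, log_variance, rng) for _ in range(3)]
for z in variations:
    print([round(v, 2) for v in z])
```

Each sampled point sits near the mean but not exactly on it, so a decoder fed these points would produce slightly different variations of the same input.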

GANs are ideal for high-quality image generation, like photorealistic images, or to modify image attributes. They are used in tasks like style transfer, photo-realistic rendering, or creating entirely new images from scratch. VAEs are useful for tasks where understanding the data's underlying structure is important, like data denoising, anomaly detection, or generating new data with controlled variations.

Specific software tools or platforms might prefer one over the other based on the application. For instance, tools for realistic image generation might lean towards GANs, whereas those for data analysis or less photorealistic image manipulation might use VAEs.

Designers should choose between GANs and VAEs based on the specific requirements of their project, considering factors like the desired output quality, the level of control over the output, and the nature of the data used by the AI.

Training Process

Generative AI is trained on large datasets containing examples of the type of content it's intended to generate. During training, the model learns to identify patterns, styles, and features in the data. The learning process involves adjusting the parameters of the neural network to minimize the difference between the generated content and the real data.
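"Adjusting the parameters to minimize the difference" is usually done with some form of gradient descent. The sketch below shows the idea on the smallest possible model, a line with two parameters, rather than a neural network; the data, learning rate and step count are arbitrary choices for illustration.

```python
def train(inputs, targets, learning_rate=0.05, steps=500):
    """Fit y = weight * x + bias by gradient descent on mean squared error."""
    weight, bias = 0.0, 0.0
    n = len(inputs)
    for _ in range(steps):
        # Difference between the model's output and the real data.
        errors = [(weight * x + bias) - y for x, y in zip(inputs, targets)]
        # Gradients of the mean squared error with respect to each parameter.
        grad_weight = 2.0 * sum(e * x for e, x in zip(errors, inputs)) / n
        grad_bias = 2.0 * sum(errors) / n
        # Nudge each parameter in the direction that reduces the error.
        weight -= learning_rate * grad_weight
        bias -= learning_rate * grad_bias
    return weight, bias

inputs = [0.0, 1.0, 2.0, 3.0]
targets = [1.0, 3.0, 5.0, 7.0]  # generated from y = 2x + 1
weight, bias = train(inputs, targets)
print(round(weight, 2), round(bias, 2))
```

Real generative models follow the same loop, only with millions or billions of parameters and a loss function suited to images or text.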

Learning from Data

The model learns by exposure to diverse and representative datasets, which enables it to generalize its understanding and generate content that aligns with the characteristics of the training data. This learning phase is critical for the model to produce coherent and relevant outputs.

Fine-Tuning and Refinement

After the initial training, generative AI models often undergo fine-tuning to improve their performance. This refinement process involves the adjustment of parameters, optimization of algorithms, and sometimes the incorporation of feedback mechanisms.

Generation of New Content

Once trained, the generative AI model can autonomously produce new content based on its learned understanding of the patterns and structures present in the training data. Depending on the application, this content may range from realistic images to coherent text and even music.

Ioana Teleanu shares how designers can incorporate AI into their design process in this video.

Before showing you an end-to-end AI-powered design process, which still happens under human guidance (the key point in this conversation), let's go quickly over how AI can help us. For one, it can decrease cognitive load, aid decision making by processing large volumes of data, and help us automate repetitive tasks such as formatting images or resizing text.

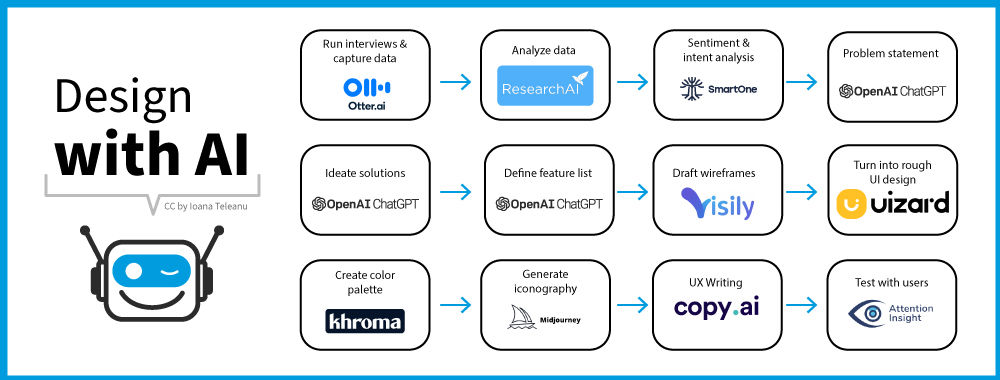

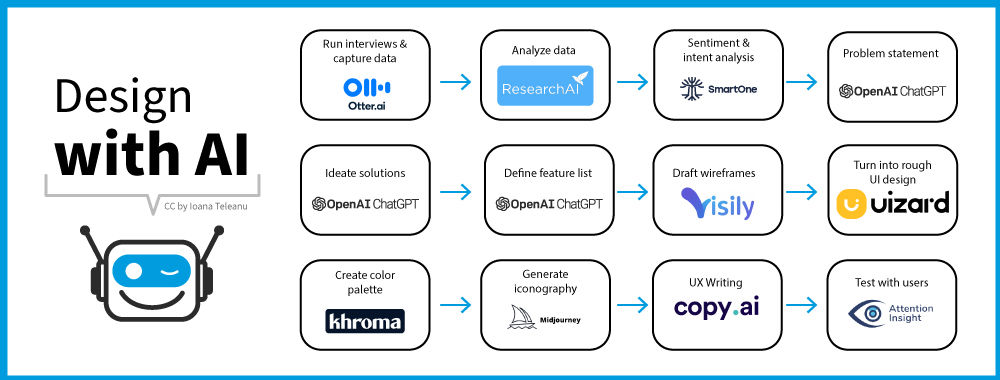

It can help us by providing more insights into human behavior and usage patterns. It can also help with creating prototypes or mockups and all kinds of visual assets, it can spot usability issues, and so on. AI can help us at every step of the design process: covering our blind spots, augmenting our thinking, making us more efficient if we use it correctly. Here's what an end-to-end design process augmented by AI may look like. But the key to reading this schema, or how I prefer to look at it, is that there's always a person orchestrating this. The future in which AI could generate a cohesive, coherent, reliable and relevant design process end to end is a very distant future for now, and there's a pressing need for someone governing over this process, applying critical thinking and showing intentionality at every stage of the design process. That's also because AI shows multiple limitations on each of the steps shown in the schema.

Nielsen Norman Group published an article unpacking the limits that currently surround the use of AI in research. To understand the context of their analysis, you need to know that there are two types of AI-powered research tools we currently see on the market: insight generators and collaborators. First, AI insight generators. These tools summarize user research sessions based only on the transcripts.

Since they don't accept any kind of additional information (context, past research, background information about the product and users and so on), they can be highly problematic in how they generate and present those summaries. While there are some workarounds, like uploading background information as session notes to be added to the analysis, it's not the right framing for the source and it's not going to reflect correctly in the analysis and generation. Humans would be much better at this: the scoping and systems thinking required to understand the interpretation landscape.

Second, AI collaborators. These work similarly to insight generators, but they're slightly better because they accept some contextual information provided by the researcher. For instance, the researcher might show the AI some human-generated interpretation to train it. The tool can then recommend tags for the thematic analysis of the data. In addition to session transcripts, collaborators can also analyze researchers' notes and then create themes and insights based on input from multiple sources. But even though they appear to be a bit better, they're still significantly limited and pose a lot of problems if not used with the right mindset and caution.

The limitations they've identified and expand on in detail are as follows. Most AI tools can't process visual input, and the biggest problem with that is that no human or AI tool can analyze usability testing sessions by the transcript alone.

Usability testing is a method that inherently relies on observing how the user is interacting with the product. Participants often think aloud, describing what they're doing and thinking. Their words do provide valuable information. However, you should never analyze usability tests based only on what participants say. Transcript-only analysis misses important context in user tests because participants don't verbalize all their actions, don't describe every element in the product, and don't always have a clear understanding or mental model of the product.

So for now, Nielsen Norman Group's recommendation is: do not trust AI tools that claim to be able to analyze usability testing sessions by transcripts alone. Future tools able to process video visuals will be much more useful for this method. Another problem is the limited understanding of the context. This remains a major problem. AI insight generators don't yet accept the study goals or research questions, insights or tags from previous rounds of research, background information about a product or the user groups, contextual information about each participant (new user versus existing user), or the list of tasks or interview questions.

There is also a problem with the lack of citation and validation, which raises multiple concerns. The tools aren't able to differentiate between the researcher's notes and the actual session transcript. This is a major ethical concern: we must always clearly separate our own interpretation or assumptions from what the participants said or did.

Another problem with the lack of citation is that it makes verifying accuracy very difficult. AI systems can sometimes produce information that sounds very plausible, but is actually incorrect. Unstable performance and usability issues are another problem. None of the tools they tested had solid usability or performance. They reported outages, errors and unstable performance in general.

And then there's the problem of bias. According to Reva Schwartz and her colleagues, AI systems and applications can involve biases at three levels: systemic, statistical and computational, and human. AI must be trained on data, which can introduce systemic biases (such as historical and institutional ones) and statistical biases (such as a dataset sample that is not representative enough). When people use AI-powered results in decision making, they can bring in human biases such as anchoring bias. So bias can creep into research efforts on multiple levels, and these tools don't yet have the mechanisms in place to prevent that.

I wanted to discuss the limitations reported in the article in detail because I believe we can easily extrapolate and expand them beyond just research tools. Most of these problems will be observable in other types of AI companions in the design process: biases in image generation, limitations in being offered context, other kinds of input limitations (not accepting files or images), output vagueness, generic results, and so on. So I think that this is a necessary frame to keep in mind when interacting and designing with the help of AI. The tools are not yet very reliable or accurate, so take everything they produce with a grain of salt and apply critical thinking at all times.
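One of the statistical biases mentioned in the transcript, a sample that does not represent the population, is simple to check for before feeding research data into any tool. The sketch below is a minimal illustration; the group names and all numbers are hypothetical, and a real check would also need a judgment about how large a gap is acceptable.

```python
def representation_gaps(sample_counts, population_shares):
    """Return sample share minus population share for each group."""
    total = sum(sample_counts.values())
    return {
        group: sample_counts.get(group, 0) / total - share
        for group, share in population_shares.items()
    }

# Hypothetical numbers, for illustration only.
sample_counts = {"new users": 10, "existing users": 90}
population_shares = {"new users": 0.4, "existing users": 0.6}

for group, gap in representation_gaps(sample_counts, population_shares).items():
    print(f"{group}: {gap:+.0%}")
```

A large negative gap flags a group that is under-represented in the data, so any insight generated from it deserves extra scrutiny for that group.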

Designers can leverage Generative AI in various impactful ways, some of which are:

Idea Generation: Generative AI can quickly produce a range of design options, providing inspiration and starting points for projects.

Prototyping and Testing: Use Generative AI to create multiple design prototypes, enabling rapid testing and iteration.

Customization and Personalization: Tailor designs to specific user preferences or requirements by training Generative AI with relevant data.

Efficiency and Productivity: Automate repetitive design tasks, freeing time for more creative and complex aspects of design work.

Explore New Design Frontiers: Experiment with innovative design forms and patterns that may not be immediately obvious or intuitive to human designers.

While Generative AI is a powerful tool, it's essential to maintain a balance. Designers must drive the design process and use AI as a collaborative partner.

There are several programs that use Generative AI for a wide range of applications, from content creation to the enhancement of traditional software capabilities. See some of these programs in the following video.

Here are some examples of tools that use Generative AI:

Adobe Photoshop: Adobe has integrated AI features into Photoshop, such as content-aware fill, which intelligently fills portions of an image based on its surroundings.

Autodesk: Autodesk uses AI in its CAD and 3D modeling software to optimize design processes, such as generative design in Fusion 360, which allows designers to explore multiple design iterations based on specific constraints and requirements.

GPT by OpenAI: While primarily known for text generation, GPT has been used in various applications, including the generation of code, writing, and even aiding in creative brainstorming processes.

Generative AI can create completely original designs. It learns from a vast array of existing data and patterns and then uses this knowledge to generate new designs that are unique and have not been explicitly programmed by humans.

However, it's essential to understand that the originality of these designs is influenced by the data on which it has been trained. The AI's output reflects its input; it remixes and reinterprets existing styles, patterns, and elements to create something new. While generative AI can provide designs that appear original, designers should critically assess and refine these outputs to ensure they meet specific design goals and maintain a human touch in their work.

In the field of AI-generated art, projects like Unsupervised by artist Refik Anadol showcase the impressive capabilities of generative AI. Using a unique AI model trained on data from the Museum of Modern Art (MoMA), Unsupervised produces abstract artworks, illustrating the intersection of art and advanced AI research. However, this creative potential raises concerns about transparency and ethics. Anadol addresses the copyright debate by openly recognizing the collaborative role of the AI model, StyleGAN2 ADA, and the human artist. Unsupervised adopts a shared authorship model, providing clarity in navigating the evolving landscape of art copyright for AI-generated art.

It's crucial to understand that while Generative AI is a powerful tool, it's not without its flaws. One significant limitation is the dependency on data quality. The outputs of generative AI are only as good as the data it's trained on. If the training data is limited, biased, or of poor quality, the AI's outputs will reflect these flaws. This can lead to designs that are not only substandard but potentially biased or culturally insensitive.

Another key limitation is the lack of true creativity and intuition. Generative AI can produce novel designs, but it doesn't truly “understand” what it's creating. It lacks the ability to comprehend the cultural, emotional, or contextual significance of a design, something that's second nature to human designers. This means that while AI can assist in the design process, it can't replace the nuanced understanding and creative intuition of a human designer.

Additionally, there are ethical and legal concerns. AI-generated designs might inadvertently replicate elements that are copyrighted, leading to potential legal issues. There's also the risk of over-reliance on AI, which could lead to a decline in human skill development and an undervaluing of human creativity.

Generative AI is a fantastic tool that can enhance and streamline the design process but it's important to use it as a collaborative partner, not a replacement. Designers need to understand Generative AI’s limitations to harness its power effectively while maintaining the irreplaceable value of human creativity and judgment in the design process.

There are many ways that Generative AI might evolve in the coming years. One key area of development could be its seamless integration into design tools. This integration would not only streamline the design process but also enhance collaboration. Designers would be able to quickly iterate on designs, with AI providing options and suggestions based on their inputs.

Another key area is personalization. Generative AI might get even better at understanding individual preferences and cultural contexts. This means designs would become more personalized and user-centric, catering to specific needs and tastes. Designers might be able to create websites that change their layout and style based on who's viewing them, or products that adapt their features to suit each user.

Generative AI could also expand into new fields like 3D modeling, VR, and AR. This expansion opens up thrilling possibilities for designers, allowing them to create immersive experiences and explore design in entirely new dimensions.

Moreover, as ethical and sustainability considerations become increasingly important, generative AI could become a vital tool to make designs more eco-friendly and socially responsible. By analyzing complex datasets, AI could help identify sustainable materials and ethical design practices.

As generative AI shapes the future of design, it's essential to navigate emerging ethical and legal challenges. Designers will need to balance innovation with respect for intellectual property rights and ethical considerations, while upholding core design principles.

In this video, UX design pioneer Don Norman talks about how we can collaborate with AI and highlights that the AI apps designers use need human input.

Take our AI for Designers course to learn more about how to incorporate AI into the design process.

To learn more about generative AI, read What is generative AI? by IBM and What is generative AI by Caltech.

To learn more about the types of conversations users engage with Generative AI, read The 6 Types of Conversations with Generative AI.

To learn more about generative AI and copyright, read Generative AI meets copyright.

Generative AI is a branch of artificial intelligence focused on creating new content. It learns from large datasets to generate outputs like images, text, and music. This technology is instrumental in design, where it can assist in developing visual elements and user interfaces.

For designers, generative AI offers a powerful tool to generate design ideas and get inspiration quickly. It's essential to use it to complement human creativity, not as a replacement.

Take our AI for Designers course to learn more about AI.

Generative AI distinguishes itself from other AI forms by its ability to create new content, unlike traditional AI, which primarily analyzes or processes existing data. Generative AI, exemplified by models like Generative Adversarial Networks (GANs), excels in generating novel data such as images, text, or music. This contrasts with other AI models that focus on analyzing user behavior, interpreting data, or automating routine tasks. Some generative models also learn in a feedback-driven way: a GAN's generator, for example, continually refines its output based on the discriminator's judgments, rather than relying only on labeled examples.

To learn more about generative AI, read What is generative AI? by IBM and take our AI for Designers course to learn more about AI.

Generative AI significantly impacts UI/UX design, primarily by automating and enhancing design processes. It enables rapid generation of diverse design options, aiding in brainstorming and prototyping. This technology facilitates the creation of personalized UI/UX designs by analyzing user data, thereby improving user engagement. Additionally, it aids in efficiently producing design iterations, allowing for experimentation with various layouts and color schemes.

In practical terms, designers can utilize generative AI for several purposes. Firstly, AI-driven analytics tools can provide valuable insights into user behavior, guiding design decisions to enhance user experience. Secondly, AI can be instrumental in ensuring designs are accessible and inclusive, catering to a wide range of users. Lastly, automated testing through AI can identify potential issues in UI/UX elements, ensuring both functionality and user satisfaction.

To effectively use Generative AI in design, a combination of technical and creative skills is essential. Technical skills include a basic understanding of machine learning and AI principles, as well as proficiency in using design software that integrates AI technologies. Designers should also have a grasp of data handling, as Generative AI relies heavily on data inputs to create outputs. These technical skills enable designers to effectively harness AI tools, customize them for specific tasks, and interpret their outputs accurately.

On the creative side, strong foundational design skills are crucial. This encompasses knowledge of design principles, color theory, typography, and user experience design. Designers must have the ability to critically evaluate AI-generated outputs, ensuring that they align with the project's aesthetic and functional requirements.

Generative AI in product design primarily boosts creativity and efficiency. It enables rapid generation of diverse design options, pushing the boundaries of innovation. This leads to more imaginative, meaningful and user-centric products.

Additionally, AI automates routine tasks, which allows designers to focus on refining designs and enhancing user experience, while AI-driven simulations help identify design flaws early, saving time and improving product quality.

Generative AI streamlines prototyping by enabling the quick generation of multiple design iterations, which allows for efficient exploration of various layouts and styles. It integrates user feedback to refine prototypes and ensures they meet user needs.

In wireframing, AI assists in creating detailed frameworks, suggesting layouts and UI elements based on extensive design data. This accelerates the wireframing process and introduces data-driven insights, enhancing usability and the final product's effectiveness.

Some common tools and platforms for Generative AI in design include:

Adobe Sensei: Integrated into various Adobe products, Sensei uses AI and machine learning to automate complex design tasks, enhance creativity, and improve workflow efficiency.

RunwayML: A platform that offers easy access to cutting-edge machine learning models, enabling designers and artists to experiment with AI without needing extensive programming knowledge.

DeepArt: Specializes in transforming photos into artworks using the styles of famous artists through deep learning algorithms.

Artbreeder: A collaborative platform that allows users to create and manipulate images, including portraits, landscapes, and objects, through generative AI techniques.

GPT by OpenAI: While primarily known for text generation, GPT has been used in design for generating content for websites, marketing material, and even ideation for design concepts.

Figma’s FigJam: While not exclusively an AI tool, FigJam integrates AI functionality for assisting in the collaborative design process, from brainstorming to wireframing.

These tools and platforms are revolutionizing the way designers approach creative tasks, offering new possibilities for innovation and efficiency in the design process.

Yes, ChatGPT is a generative AI tool. It is based on the GPT (Generative Pre-trained Transformer) architecture developed by OpenAI. ChatGPT is designed to generate text based on the input it receives. It can create coherent and contextually relevant responses, making it suitable for a wide range of applications including conversation simulation, content creation, and answering queries. The tool leverages advanced machine learning techniques to understand and respond to text inputs, making it a powerful example of generative AI in action.

In the context of design, while ChatGPT is not specifically tailored for visual design tasks like prototyping or wireframing, it can assist in areas such as content generation for UI elements, creating descriptive texts, generating ideas for design concepts, and even providing guidance or answering questions about design principles and practices.

Ethical considerations in using Generative AI in design focus on originality and data privacy. Designers must ensure AI-generated designs don’t infringe on intellectual property and maintain creative authenticity. Additionally, the use of data in Generative AI requires adherence to privacy laws and ethical standards, ensuring data is collected and used responsibly to avoid biases and uphold user privacy.

Generative AI's impact on intellectual property rights in design presents a complex challenge. On one hand, AI can generate new designs by learning from existing data, which raises concerns about the originality and ownership of these AI-created works. Determining the authorship of designs generated by AI is complicated, as they are products of algorithms trained on pre-existing works. This blurs the lines of copyright and raises questions about whether AI-generated designs can be considered entirely new creations or derivatives of their training data.

On the other hand, AI's ability to mimic styles and elements from existing designs can lead to potential copyright infringements. Ensure that AI-generated designs do not unintentionally replicate copyrighted elements too closely. This requires designers and AI developers to be vigilant about the data used to train AI models and the outputs they produce. Additionally, there's an ongoing debate about whether laws need to evolve to address the unique challenges posed by AI in the creative process, including the need for clear guidelines on the ownership and copyright of AI-generated content.

Emerging trends in generative AI for design are reshaping how designers approach creativity and problem-solving:

Increased Personalization: AI is being used to create highly personalized user experiences and designs. By analyzing user data, AI can tailor designs to individual preferences, enhancing user engagement and satisfaction.

Collaborative AI Design Tools: The integration of AI into design tools is making them smarter and more intuitive. These tools can now assist designers with suggestions, automate routine tasks, and even generate complete design concepts.

AI in User Research and Testing: AI algorithms are increasingly used to analyze user behavior and testing data, providing insights that guide design decisions and improve user experiences.

Sustainable Design: AI is being used to create designs that are not only aesthetically pleasing but also environmentally sustainable, by optimizing materials and processes for minimal environmental impact.

Interactive and Dynamic Designs: Generative AI enables the creation of designs that can change and evolve in real-time based on user interactions, leading to more dynamic and engaging user experiences.

3D and Spatial Design: With the rise of virtual and augmented reality, AI is being used to generate immersive and complex 3D environments and objects.

These trends show a future where AI not only assists in the design process but also enables new forms of creativity and interaction, pushing the boundaries of what's possible in design.

Take a deep dive into Generative AI with our course AI for Designers.

In an era where technology is rapidly reshaping the way we interact with the world, understanding the intricacies of AI is not just a skill, but a necessity for designers. The AI for Designers course delves into the heart of this game-changing field, empowering you to navigate the complexities of designing in the age of AI. Why is this knowledge vital? AI is not just a tool; it's a paradigm shift, revolutionizing the design landscape. As a designer, make sure that you not only keep pace with the ever-evolving tech landscape but also lead the way in creating user experiences that are intuitive, intelligent, and ethical.

AI for Designers is taught by Ioana Teleanu, a seasoned AI Product Designer and Design Educator who has established a community of over 250,000 UX enthusiasts through her social channel UX Goodies. She brings to this course her experience at renowned companies like UiPath and ING Bank, and she now works on pioneering AI projects at Miro.

In this course, you’ll explore how to work with AI in harmony and incorporate it into your design process to elevate your career to new heights. Welcome to a course that doesn’t just teach design; it shapes the future of design innovation.

In lesson 1, you’ll explore AI's significance, understand key terms like Machine Learning, Deep Learning, and Generative AI, discover AI's impact on design, and master the art of creating effective text prompts for design.

In lesson 2, you’ll learn how to enhance your design workflow using AI tools for UX research, including market analysis, persona interviews, and data processing. You’ll dive into problem-solving with AI, mastering problem definition and production ideation.

In lesson 3, you’ll discover how to incorporate AI tools for prototyping, wireframing, visual design, and UX writing into your design process. You’ll learn how AI can help you evaluate your designs, automate tasks, and ensure your product is launch-ready.

In lesson 4, you’ll explore the designer's role in AI-driven solutions, how to address challenges, analyze concerns, and deliver ethical solutions for real-world design applications.

Throughout the course, you'll receive practical tips for real-life projects. In the Build Your Portfolio exercises, you’ll practice how to integrate AI tools into your workflow and design for AI products, enabling you to create a compelling portfolio case study to attract potential employers or collaborators.

We believe in Open Access and the democratization of knowledge. Unfortunately, world-class educational materials such as this page are normally hidden behind paywalls or in expensive textbooks.

If you want this to change, link to us, or join us to help us democratize design knowledge!